FramePack: Revolutionizing Video Generation with Next-Frame Prediction AI

Generate long, high-quality videos effortlessly on consumer GPUs with FramePack’s innovative frame compression and anti-drift technology. Experience real-time frame-by-frame video creation that feels as seamless as image diffusion.

FramePack Features: Advanced AI Video Generation Made Practical

Next-Frame Prediction Neural Network

Generates videos progressively, predicting each next frame (or section of frames) from the frames generated so far.

Constant-Length Frame Compression

Compresses the input frame context to a constant length, so the generation workload stays the same regardless of video length and long videos cost no more per step than short ones.
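
To make the constant-length idea concrete, here is a minimal sketch of one schedule consistent with it, assuming geometric compression: each past frame gets half as many tokens as the next-newer frame. The 1536 tokens per frame, the factor of 2, and the helper names are illustrative assumptions, not values or functions taken from FramePack's code.

```python
def frame_token_budgets(num_past_frames: int, tokens_per_frame: int = 1536, ratio: int = 2) -> list[int]:
    """Tokens assigned to each past frame, newest first (illustrative schedule only)."""
    # The newest frame keeps its full budget; each older frame is compressed by `ratio` again.
    # Integer division means very old frames round down to 0 tokens, i.e. they drop out.
    return [tokens_per_frame // ratio**age for age in range(num_past_frames)]


def total_context_tokens(num_past_frames: int, tokens_per_frame: int = 1536, ratio: int = 2) -> int:
    """Total context length the model would attend to for the next prediction."""
    return sum(frame_token_budgets(num_past_frames, tokens_per_frame, ratio))


if __name__ == "__main__":
    for n in (1, 3, 10, 100, 3600):
        print(n, total_context_tokens(n))
```

The total approaches but never exceeds `tokens_per_frame * ratio / (ratio - 1)` (3072 tokens here), which is why the per-step workload stays flat no matter how many frames have been generated. FramePack's actual compression schedule may differ; the geometric one is simply the easiest to illustrate.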

Low VRAM Requirements

Runs efficiently on consumer-grade GPUs with as little as 6GB VRAM, including laptop RTX 30XX and 40XX series.

Long-Duration Video Support

Capable of generating videos up to 120 seconds (3600 frames at 30fps) without quality degradation.

Anti-Drift Sampling

Uses bidirectional context to prevent visual quality loss and frame drift over time.
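
One way to picture the bidirectional context, assuming the inverted anti-drifting order described for FramePack: sections are generated from the end of the video back toward the start, and every section is conditioned on the user's input frame plus the later sections already produced. The sketch below only computes that ordering; no model is involved, and the function and labels are hypothetical.

```python
def inverted_sampling_schedule(num_sections: int) -> list[tuple[int, list[str]]]:
    """Return (section_index, conditioning_context) pairs in generation order."""
    schedule = []
    generated: list[int] = []  # sections already produced; all lie later in time
    for section in reversed(range(num_sections)):
        # Each step sees the anchor (the start frame) plus the already generated future
        # sections, so it stays tied to the input image rather than only to its own outputs.
        context = ["start_frame"] + [f"section_{i}" for i in sorted(generated)]
        schedule.append((section, context))
        generated.append(section)
    return schedule


if __name__ == "__main__":
    for section, context in inverted_sampling_schedule(3):
        print(f"generate section_{section} from {context}")
```

A side effect of this ordering is that the last part of the video is produced first, with the opening section generated last.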

Real-Time Frame Preview

See each second of your video as it generates, allowing immediate feedback and adjustments.

High Batch Size Training

Can be trained with batch sizes comparable to those used for image diffusion models, improving training efficiency and output quality.

Cross-Platform Compatibility

Available for Windows and Linux, with straightforward installation and cloud deployment options for users with limited hardware.

Optimized for Speed

Generates at 1.5 to 2.5 seconds per frame on a high-end desktop GPU such as the RTX 4090, and proportionally slower on lower-end devices such as laptop GPUs.
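
These speed figures translate into wall-clock time with simple arithmetic. This sketch assumes the per-frame rate stays constant across the whole clip and ignores model loading and video encoding overhead; the helper names are hypothetical.

```python
def frame_count(seconds: float, fps: int = 30) -> int:
    """Number of frames in a clip of the given length."""
    return round(seconds * fps)


def estimated_minutes(seconds: float, sec_per_frame: float, fps: int = 30) -> float:
    """Wall-clock generation time in minutes, assuming a constant per-frame rate."""
    return frame_count(seconds, fps) * sec_per_frame / 60


if __name__ == "__main__":
    frames = frame_count(120)  # 3600 frames for the 120-second maximum at 30 fps
    for rate in (1.5, 2.5):
        print(f"{frames} frames at {rate} s/frame: about {estimated_minutes(120, rate):.0f} min")
```

At the quoted rates, a full 120-second video therefore takes roughly 90 to 150 minutes, and proportionally longer on slower GPUs.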

Open Source and Community Supported

Developed by renowned AI researcher lllyasviel, with an active GitHub repository and community tutorials.

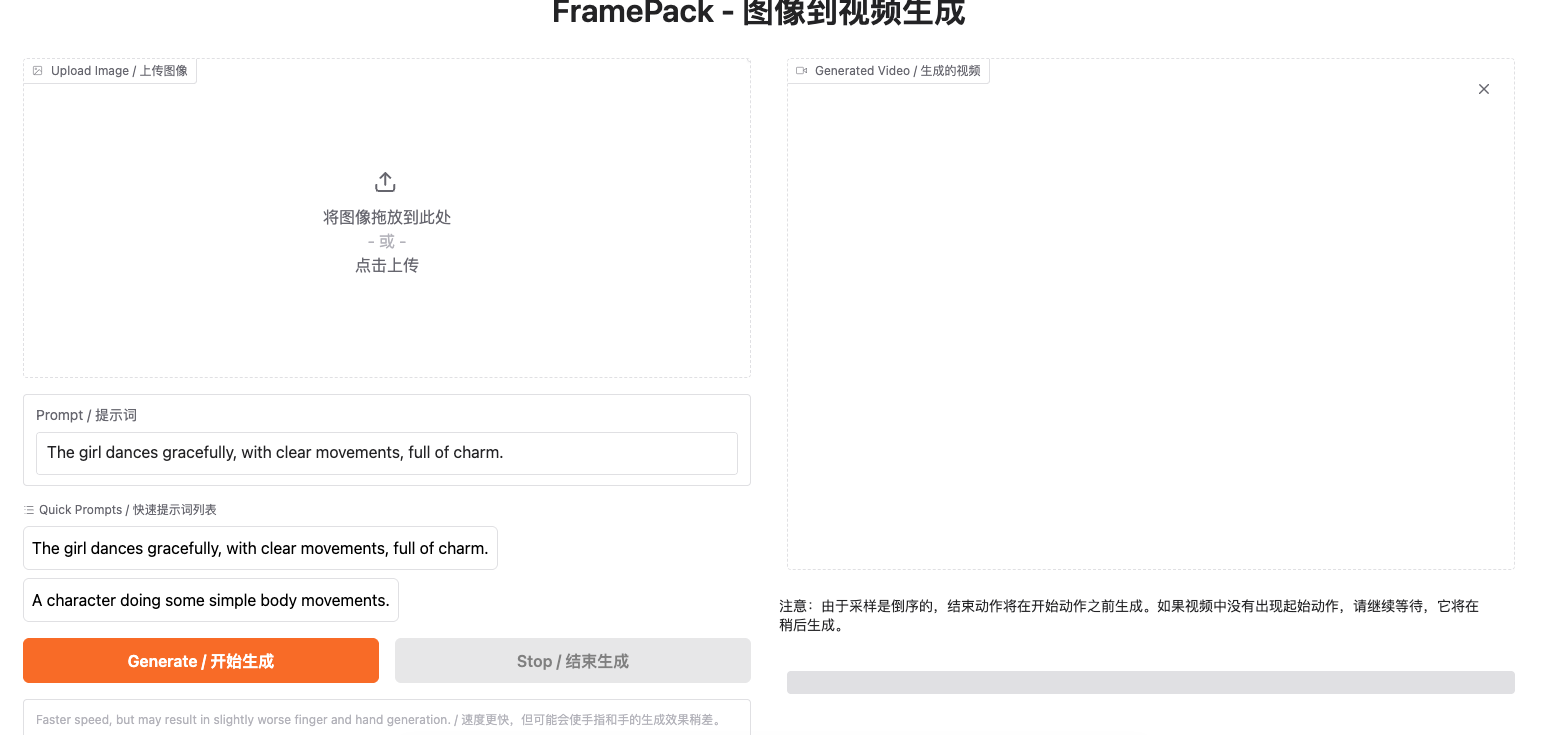

How to Use FramePack for Video Generation

1. Download and Install: Get the FramePack desktop software for Windows or Linux, and make sure your system has an Nvidia RTX 30XX, 40XX, or 50XX GPU with at least 6GB of VRAM.

2. Prepare Your Input: Use a single image or a short video clip as the input context for FramePack’s next-frame prediction model, and write a text prompt describing the motion you want.

3. Configure Settings: Set the desired video length (up to 120 seconds), frame rate (30 fps), and output resolution. FramePack manages VRAM usage automatically.

4. Start Generation: Launch the generation process. FramePack generates the video progressively, showing real-time previews as it builds.

5. Review and Export: Once generation completes, review the smooth, consistent output and export it for your projects.

FramePack FAQ: Everything You Need to Know

What is FramePack?

FramePack is an AI-powered next-frame prediction neural network that generates long, high-quality videos progressively. It compresses the input frame context to a constant length, so performance stays consistent as the video grows.

What hardware do I need to run FramePack?

FramePack requires an Nvidia RTX 30XX, 40XX, or 50XX series GPU with at least 6GB of VRAM. It supports both Windows and Linux operating systems.
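
Before installing, you can check a machine against these requirements. The sketch below queries the GPU through PyTorch's `torch.cuda.get_device_properties` when PyTorch is installed. The GPU-name pattern and the small memory allowance (`slack_gib`, to tolerate cards whose reported total is a little under the nominal 6GB) are assumptions, not official FramePack checks, and the function names are hypothetical.

```python
import re


def is_supported_series(gpu_name: str) -> bool:
    """True for NVIDIA RTX 30XX, 40XX or 50XX names, e.g. 'NVIDIA GeForce RTX 3060 Laptop GPU'."""
    # Assumed naming pattern: "RTX" followed by a 4-digit model starting with 30, 40 or 50.
    return re.search(r"RTX\s*(30|40|50)\d{2}", gpu_name) is not None


def meets_vram_minimum(total_bytes: int, min_gib: float = 6.0, slack_gib: float = 0.25) -> bool:
    """Compare reported VRAM against the 6GB minimum, allowing a small hypothetical slack."""
    return total_bytes / 2**30 >= min_gib - slack_gib


def check_local_gpu() -> None:
    try:
        import torch  # optional: only needed to query the real device
    except ImportError:
        print("PyTorch not installed; cannot query the GPU.")
        return
    if not torch.cuda.is_available():
        print("No CUDA GPU detected.")
        return
    props = torch.cuda.get_device_properties(0)
    print(f"{props.name}: {props.total_memory / 2**30:.1f} GiB")
    print("series OK:", is_supported_series(props.name))
    print("VRAM OK:", meets_vram_minimum(props.total_memory))


if __name__ == "__main__":
    check_local_gpu()
```

Keeping the two checks as pure functions means they can be reused on names and sizes reported by other tools, such as `nvidia-smi`.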

How long of a video can FramePack generate?

FramePack can generate videos up to 120 seconds long at 30 frames per second, for a total of 3600 frames. Because the input context is compressed to a constant length, VRAM usage and per-frame generation time do not grow with video length.

How does FramePack handle video quality over time?

FramePack combines anti-drift sampling with a frame compression mechanism that gives frames closer to the prediction target a larger share of the context, preventing quality degradation and forgetting in long videos.

Can I see the video as it is being generated?

Yes, FramePack provides real-time frame-by-frame previews during generation, allowing you to monitor progress and make adjustments if needed.

Is FramePack suitable for laptops or low-end GPUs?

Yes, FramePack is optimized to run on laptops with RTX 30XX series GPUs and requires only 6GB of VRAM, making high-quality video generation accessible on consumer hardware.

Does FramePack support cloud-based video generation?

Yes, there are cloud deployment options such as RunPod and Massed Compute for users who want scalable GPU resources or have limited local hardware.

Where can I find installation and usage tutorials?

Comprehensive tutorials and installation guides are available on GitHub and YouTube, including step-by-step instructions for Windows local installs and cloud setups.

Is FramePack open source?

Yes, FramePack’s official implementation and desktop software are open source and maintained on GitHub by the developer lllyasviel.

What makes FramePack different from other video generation tools?

FramePack’s frame compression and anti-drift techniques let it generate long, stable videos efficiently on low-VRAM hardware, with real-time previews and consistent quality from the first frame to the last.